1) Introduction

2) What is a good programming language?

3) "Intelligence-boosting"

"Divide ut imperare" (Divide and conquer)

Two heads are better than one (facilitate teamwork)

Be more declarative and less procedural (higher abstraction)

A library of "Solution patterns / heuristics"

4) Easy to learn

5) Efficient

For the computer: use less memory and CPU cycles

For the programmer: use fewer keystrokes

6) Clear

7) Complete

8) Widely-used

9) Avoid common programming language design pitfalls

Forgetting the nature of engineering (calculated compromises)

Misguided "simplicity"

Write-Only languages

Dog eating its own vomit (self-modifying source code)

Language inventor's pride

How does one go about designing a better programming language? I don't know, otherwise I'd write a "cookbook recipe" to do so, then I'd use that recipe to cook up the best programming language in the history of computers! All I have is "bits and pieces" of knowledge I've gleaned in books and on the Internet. This partial knowledge is what I mean by "design rules". These rules are hopefully relevant for any programming language design effort.

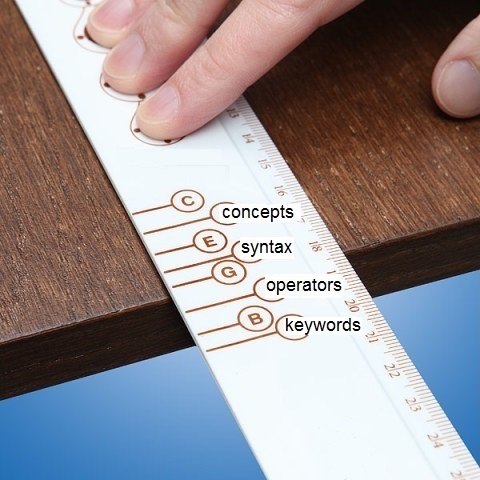

A spoken human language (i.e. a "natural" language) allows transmission of information from a speaker to a listener:

A good natural language has many characteristics. For example, it's complete, i.e. it allows the transmission of anything the speaker wants to tell the listener. A counter-example would be a limited language with so few words that it would only allow a speaker to talk about the weather, sports and movie stars, but not about important topics like philosophy, politics and religion.

A good natural language is also clear, so the message won't be misunderstood. There are no ambiguities. A counter-example would be a language that used the same word to talk about a car's brake pedal, and the accelerator pedal. You can imagine the painful conversations between a student driver and a driving instructor!

Because a message can only be received by somebody who can understand it, a good natural language is widely-used, in other words many people know it. This is why a very well-designed language that nobody speaks is worse (in practice) than a language littered with flaws, but that is understood by a large population.

Building and transmitting a message takes energy, and energy is a scarce resource, so a good natural language is efficient. As a counter-example, imagine a language where, instead of a very brief silence between sounds to separate words, you had to do a push-up! Just saying "hello how are you" would take three push-ups! (I'm sure there are teachers reading this who are thinking: "Wow, this would be the perfect natural language for those impolite students who bother everybody by their constant chatting during class!")

Because we are human persons, therefore born with a blank mind devoid of any knowledge, we can only communicate after having learnt how to! So a good natural language is easy to learn. Also because we are mere humans, our intelligence is very weak, and we need language as a kind of crutch to help us think. In other words, a natural language must not only be complete (i.e. "open a door" in our brains to allow thoughts to come out), but also "intelligence-boosting" (i.e. help us "manufacture" good thoughts inside our brains, including thoughts we've never thought of before!).

A programming language is in many ways similar to a natural language, so a good programming language must be complete, clear, widely-used, efficient, easy to learn and "intelligence-boosting" (for lack of a better expression). But one of the big differences between natural languages and programming languages is that there are two categories of "listeners" for programming languages: the machine you are trying to program, and the other human persons who will try to understand and modify your source code. Machines don't need to learn, and they don't have any intelligence to boost, but the other desirable characteristics of a language apply.

So because of the very nature of languages in general, and programming languages in particular, we can organize design rules for programming languages under these headings (sorted as far as I can in decreasing order of importance). I added one last category for what NOT to do, i.e. the common pitfalls to be avoided in programming language design.

How can a programming language make us "more intelligent"?

I think we have to first distinguish between "intelligence-boosting" strictly speaking, and "just removing burdens on our intelligence". A bit like an athlete who is trying to run faster: he can remove burdens (like lose weight, or buy a better pair of running shoes that is much lighter, or take his telephone and keys and wallet out of his pockets, etc.), or he can actually "boost his running capacity" (like get stronger leg muscles and a bigger heart that pumps more blood so his muscles get more oxygen, etc.). For a programming language, "removing burdens to intelligence" seems similar to all the other categories of language design rules. For example, if a language is hard to learn, you waste more "brain cycles" learning the language than using it (for example, having to go re-read your programming language's manual, because your program won't compile). If it's inefficient, you waste "brain cycles" spelling out what you want in excruciating detail, because your programming language "doesn't take a hint". Unclear? All the effort wasted trying to disambiguate source code! Incomplete? Here again, more "brain cycles" wasted finding some contorted workaround to say things your language doesn't let you say naturally. And so on.

Another distinction seems appropriate, since as far as I know, we are pretty well born with whatever level of intelligence we have. If we assume (for the sake of the metaphor) that a runner is born with whatever leg muscles and whatever heart he has, and cannot increase their power, then the only way he can "boost his running speed" (apart from just removing burdens), is by "artificial" means, like running down a hill with a strong wind in his back.

So what is the equivalent of "running downhill and down wind" for a programming language? It seems to me you can try to "boost intelligence" by making:

- the problem smaller;

- the brain bigger;

- the brain go farther with the same effort (like shifting up on a bicycle);

- the brain able to start moving slowly when it's stuck (like shifting down on a bicycle).

If a problem is too big to solve, you chop it up into smaller sub-problems which individually are easier to solve. This idea is so important in computer science that it goes by many names, like "information hiding", or "modularity", "loose coupling and strong cohesion", "mastering complexity", "increasing proximity", etc.

In order to "chop one big problem into several smaller problems", you need:

- A kind of "magic knife" that can cut a problem;

- Many "magic boxes" in which to place each sub-problem;

- Some way of "magically reconnecting" all the "magic boxes", after each sub-problem inside them has been solved.
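As a rough illustration of the knife, the boxes and the reconnection, here is a minimal Python sketch. The task (a word-frequency report) and the names `tokenize`, `count_words`, `format_report` and `word_report` are invented for this example; the point is only that each box sees nothing but the output of the previous one.

```python
from collections import Counter


def tokenize(text: str) -> list[str]:
    """Box 1: turn raw text into lowercase words (knows nothing about counting)."""
    words = (w.strip(".,!?;:").lower() for w in text.split())
    return [w for w in words if w]


def count_words(words: list[str]) -> Counter:
    """Box 2: count occurrences (knows nothing about raw text or formatting)."""
    return Counter(words)


def format_report(counts: Counter, top: int) -> str:
    """Box 3: render the most frequent words, one per line."""
    # Sort by decreasing count, then alphabetically to break ties.
    ranked = sorted(counts.items(), key=lambda kv: (-kv[1], kv[0]))
    return "\n".join(f"{word}: {n}" for word, n in ranked[:top])


def word_report(text: str, top: int = 3) -> str:
    """Reconnect the boxes: the only 'connectors' are arguments and return values."""
    return format_report(count_words(tokenize(text)), top)


print(word_report("The cat and the dog. The end!"))
```

Any one of the three boxes can be rewritten (say, a smarter `tokenize`) without opening the other two, as long as its connectors stay the same.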

The expression "loose coupling and strong cohesion" refers to the proper usage of the "magic knife". Imagine if you had to cut up a dog and then put it back together again. You would not want to cut the heart, or the kidneys, or the liver into pieces: those organs are very complicated internally. "Strong cohesion" means "all the internal parts of the liver are constantly interacting with each other, so leave them together". "Loose coupling" means "if you just cut the simple veins and arteries going in and out of the liver, you won't have much cutting to do, and reconnection will be much easier".

The expression "information hiding" refers more to the "magic boxes". If you can put a sub-problem inside a "magic box", and only see that box and a few simple "magic connectors" (so you can reconnect that sub-problem to the rest after it has been solved), you have become "more intelligent"! All your intelligence needs to see is one simple box and a few connectors. You have "hidden" a lot of "information" about all the painful and difficult details of the sub-problem.

Another metaphor to explain "information hiding" is juggling: if you have to juggle with ten balls at once, you need a lot of talent! But if you put those ten balls in a box, it becomes very easy to "juggle" by just throwing one box up and down! You have "hidden" the complexity of those ten balls behind a simple interface (a box). You have "boosted your juggling talent".
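The "ten balls in a box" idea might look like the following in Python. This is only a sketch: the class name `MovingAverage` and its details are made up for the example, and it assumes a window of at least one item. The caller juggles one box with two connectors (`add` and `value`); the storage and the running sum stay hidden inside.

```python
from collections import deque


class MovingAverage:
    """A 'magic box': callers see add() and value, nothing else."""

    def __init__(self, window: int) -> None:
        # Private details (assumes window >= 1).
        self._items = deque(maxlen=window)  # the last `window` readings
        self._total = 0.0                   # running sum of those readings

    def add(self, x: float) -> None:
        if len(self._items) == self._items.maxlen:
            # The oldest reading is about to be evicted by append().
            self._total -= self._items[0]
        self._items.append(x)
        self._total += x

    @property
    def value(self) -> float:
        return self._total / len(self._items) if self._items else 0.0


avg = MovingAverage(window=3)
for reading in (10, 20, 30, 40):
    avg.add(reading)
print(avg.value)  # average of the last three readings (20, 30, 40)
```

The leading underscore is Python's convention for "private": it does not enforce the hiding, it only signals which parts are inside the box.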

The expression "increase proximity" is just another way of describing an advantage of sub-problems, which by definition will be smaller than the big problem (so everything will seem "closer").

In my experience, the hardest part about "divide and conquer" is getting a really sharp "magic knife". Often a sub-problem is not completely separated from the rest. You might have the appearance of a large problem neatly divided into several boxes containing the sub-problems, with nice neat connectors on the front of the boxes, ready to be reconnected, so the whole big problem can be solved. But behind the boxes, you have squirrely tendons and nerves still connecting the sub-problems! Nothing has been really divided! If you try to move one box, all the other boxes are dragged along. And if you open a box and poke around in it, another box will bark, or even bite you! (For example, fixing a bug in one part of the program will add a new bug in another, supposedly unrelated, part of the program.)

You cannot increase the size of your brain strictly speaking, but you can try to make your brain "bigger" by adding brains together. In a way, "boosting intelligence" with teamwork is related to many other language design rules in this list:

- "divide and conquer": if you can divide your problem, you can assign each sub-problem to a different programmer;

- keep things in the same places: if the potato peeler is always in the top drawer on the left, different cooks can prepare meals more easily in the same kitchen;

- respect a coding standard: the more your programmers "speak the same language", the easier they can work on each other's code;

- code reuse: it's hard to collaborate when you don't have access to other people's work;

- etc.

Are there things that can be done to "add brains together", that are not just repetitions of rules found elsewhere in this list? Are there pure "teamwork facilitation" rules? I'm sorry, I don't know. Please contact me and add your brains to mine if you think of any.

Ideally, you make a programming language as declarative as possible. For example, instead of telling your wife:

RELAX biceps PULL triceps 50% ROTATE elbow 37 degrees OPEN hand PULL triceps 10% CLOSE hand PULL biceps 5%

... and so on, you just say: "Please Honey, could you pass the salt?" Instead of having to be very "procedural" about all the flexor and extensor muscles in your wife's arm, you just "declare" your intention, and that gives you the same result. (Actually, you might get a slap in the face if you tried to be procedural with your wife, but that's not a programming language design problem.)

Being more "declarative" is a lot like shifting up gears on a bicycle. Bicycle transmissions are a technological marvel. You have the same leg muscles, but with the same effort and same pedal turns, you can go faster if you shift up. In programming, our "brain muscles" can only provide so much power to "turn the pedals". In other words, the average number of lines of code produced per day (high-quality lines of code, with few bugs) is pretty much stable. So if you program in a very procedural language, you will produce roughly the same number of lines of code per day as if you programmed in a very declarative language. But of course you will go faster if you "shift up", if you use a more declarative language.
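To make "shifting up" a little more tangible, here is a small Python sketch with made-up data (the `orders` list and its field names are assumptions for the example). Both versions compute the same number; the first spells out every pedal turn, the second just states what is wanted.

```python
# Hypothetical data for the example.
orders = [
    {"customer": "Ann", "total": 120.0, "paid": True},
    {"customer": "Bob", "total": 80.0, "paid": False},
    {"customer": "Cy", "total": 45.5, "paid": True},
]

# Procedural: manage the index, the test and the accumulator by hand.
revenue_procedural = 0.0
i = 0
while i < len(orders):
    if orders[i]["paid"]:
        revenue_procedural = revenue_procedural + orders[i]["total"]
    i = i + 1

# More declarative: "the sum of the totals of the paid orders".
revenue_declarative = sum(o["total"] for o in orders if o["paid"])

print(revenue_procedural, revenue_declarative)
```

The second form is one line instead of five, and it reads almost like the comment you would otherwise have to write above the loop.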

Another way of saying the same thing is "Don't force the programmer to put in comments what he could put directly in the source code, if his programming language was more expressive." (This is because good comments don't repeat the code, but explain the intention, what the programmer wants to do with the lines of code.)

Being more "declarative" for a programming language also means it is more efficient (you can write your program with fewer keystrokes). However, here I'm not talking about reducing keystrokes, but about making you "smarter" by letting you stay in the problem domain without going down to the level of bits and bytes and gotos.

Sometimes you can't even turn the "mental pedals" of your programming bicycle, because the "hill" (i.e. the problem you are trying to solve) is too "steep". This is when you'd like to be able to "shift down" to help your brain get moving. Here, a programming language can offer "patterns of solutions", or "heuristics to find a solution" (I'm not sure what words to use to say this).

I don't have much to say here (unfortunately, because I'd be really interested in hearing about this!). This "library" needs to make the patterns accessible, or even suggest a choice of more promising patterns (i.e. if these patterns exist, but you don't know about them, they can't help you).

Also, this rule is somewhat similar to the "code reuse" that is more part of the "completeness" rule. The difference is directly re-using an existing solution is not the same as being in front of a problem that has never been solved before, but for which you are offered a "pattern", a "heuristic" which then helps you find a solution for that problem.

Because "intelligence-boosting" is so important for a good programming language, in a way the history of programming languages is the history of the discovery of new ways to implement these "intelligence-boosting" rules. (And overworked and underpaid compiler writers could reply that historically that is why their job just gets harder every year!)

Let's look at the "Divide and conquer" rule ("magic knives, boxes and connectors"). For example, a function is a kind of "magic box" in which to hide algorithmic complexity, with the function parameters as "magic connectors". In object-oriented programming, a well-designed "class" or "type" is a kind of "magic box" that hides a lot of "private" complexity, and that shows only a few "public functions and variables" (i.e. the magic connectors). In a way, exceptions (in C++, Java, etc.) can be seen as a kind of "magic connector": instead of having many connections for all the things that can go wrong inside a "magic box", you only have one, a single "blood vessel" that will transport all exceptional cases, one connector for the multitude of things that can go wrong.
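The "single blood vessel" view of exceptions can be sketched in Python as follows. The names `ConfigError`, `read_port` and `describe` are hypothetical, chosen only for this illustration: three different things can go wrong inside the box, but the caller needs just one connector to receive all of them.

```python
class ConfigError(Exception):
    """The single 'connector' for everything that can go wrong inside the box."""


def read_port(settings: dict) -> int:
    """The 'magic box': several failure modes, all leaving through ConfigError."""
    if "port" not in settings:
        raise ConfigError("missing 'port'")
    try:
        port = int(settings["port"])
    except (TypeError, ValueError) as exc:
        raise ConfigError(f"port is not a number: {settings['port']!r}") from exc
    if not 1 <= port <= 65535:
        raise ConfigError(f"port out of range: {port}")
    return port


def describe(settings: dict) -> str:
    try:
        return f"listening on {read_port(settings)}"
    except ConfigError as err:  # one handler covers all three failure modes
        return f"bad config: {err}"


print(describe({"port": "8080"}))
print(describe({"port": "abc"}))
```

Without exceptions, `read_port` would need a separate return code (or extra output parameter) for each failure, and every caller would have to wire up each one.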

If we look at the "make the language more declarative" rule, we can think of the invention of higher-level languages (like Fortran and COBOL), that liberated programmers from very low-level assembly language. Another example is object-oriented programming, where the programmer who is writing flight-control software can deal with "Flight Plans" and "Waypoints" and "Landing Corridors", instead of "if-then-else" and "while" clauses, etc.

And finally for this "intelligence-boosting" section, you can understand why I have so few rules, and why they are so poorly explained: if I could add a single really new rule in this section, or discover even one unforeseen application of such a rule, I'd be rich and famous! (Well, at least famous among programming language geeks!)

What makes a programming language easy to learn? In general, "learnability" depends on quantity, quality and order. In other words, something will be easier to learn if:

- There isn't much to learn in the first place! For example, memorizing one phone number is easier than memorizing a whole phone book.

- What is to be learned is itself "easy". Because of weakness of the human intellect, this usually means the more tangible and concrete, the better.

- What is to be learned is presented to the learner in the right order.

There are many ways of increasing "learnability" by minimizing the quantity of what is to be learned:

4.1.1) Reuse what the learner already knows. All else being equal, if something is already known, and it works well, use that in your new language. Examples: the alphabet, basic mathematical symbols, English keywords, syntax and features of existing programming languages that work well and are well-known by most programmers, etc. Counter-examples: starting to count at zero instead of one (little children learn to count with 1, 2, 3, not 0, 1, 2). Or eliminating keywords and inventing a whole new series of symbols (like APL).

4.1.2) Avoid exceptions; keep the grammar regular. All else being equal, the fewer pitfalls and exceptions in the grammar of a language, the better. Another way of saying the same thing is "bang for the buck"; every time you learn something about this language, this new notion should "give you a lot of mileage".

Apart from avoiding obfuscation (i.e. what pompous academics do when they take simple notions and explain them with long and complicated words), there is only one way to increase "learnability" by maximizing concreteness: you have to associate concrete things to abstract ideas. Example: imagine a ghost riding a horse! The ghost symbolises the abstract idea you must learn, and the horse is something hairy and smelly and noisy that gallops into your mind, carrying the abstract idea! There, now you have an image that will help you remember the importance of associating a concrete quality to abstract things you want to teach.

Since we must maximize concreteness, we should try to make all aspects of a programming language more concrete. And since a programming language usually has keywords, operators and layout of source code, each of those aspects should be made as concrete as possible.

4.2.1) Keywords. Example: instead of using "raise" and "manage" for exceptions, use "throw" and "catch". It's easy to explain to students using the metaphor of a "hot potato" that you cannot handle, so you "throw" it. Somewhere else in the program, somebody has thick gloves because they know what to do with hot potatoes, so they will "catch" them. Counter-example: the keyword "let" to declare a variable. Why not the keyword "var" to declare a variable? Students might remember that one more easily.

4.2.2) Operators. Example: to dereference a pointer, it might be easier to remember "^" than "*". You can imagine "^" is a little arrow meaning "go to what this pointer is pointing to", whereas "*" doesn't have an obvious metaphor. Another example: if "*" means "multiplication operator", then "**" can mean "exponentiation". It's probably easier to remember that "**" means "multiply a lot" as in "raise to the power".

4.2.3) Layout of source code. All else being equal, the logical structure of the program should be reflected in the actual physical layout of the source code. All good programmers do this, even though their programming language often doesn't care (some languages, like Python, enforce the connection between physical layout and logical meaning).
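Here is a small Python sketch of what "enforcing the connection between physical layout and logical meaning" looks like in practice. The two functions (`tally_a` and `tally_b`, names invented for the example) contain exactly the same statements; only the indentation of one line differs, and so does the result.

```python
def tally_a(numbers):
    positives, count = [], 0
    for n in numbers:
        if n > 0:
            positives.append(n)
            count += 1      # indented under the "if": counts positives only
    return count


def tally_b(numbers):
    positives, count = [], 0
    for n in numbers:
        if n > 0:
            positives.append(n)
        count += 1          # aligned with the "if": counts every number
    return count


print(tally_a([3, -1, 4]), tally_b([3, -1, 4]))
```

In a language with braces, the two layouts could compile to the same program, and the reader's eye could be fooled by misleading indentation; here what you see is what runs.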

Not only must the learning material be as far as possible in small quantity and tangible quality, but it must be presented in the right order:

- start at the beginning;

- elevate the learner with small steps (gradual learning curve);

- be forgiving;

- encourage good habits, discourage bad habits.

As far as I know, this means:

4.3.1) Correct learning sequence. Just as a good brick-layer starts by the bottom rows before trying to lay down higher rows of bricks, a good teacher respects the learning sequence, i.e. starting with simple and obvious things. (A counter-example might be Java, where even the simplest program requires you to see classes, functions, access specifiers, packages, etc., just to display "Hello world".)

Another meaning of "correct starting point for learning" is when a programmer has to learn a program written by somebody else (what is sometimes called "grokking", or "spelunking"). The programming language should help with this learning process (Ben Shneiderman would say: "Overview first, zoom and filter, then details-on-demand").

4.3.2) No big "leaps". It should be possible to learn everything about this programming language gradually, adding all the notions, one by one, without having big "mental steps" between each successive new notion.

Another way of talking about a "gradual learning curve" is the "onion layer" metaphor. When you start using a feature (an "onion") of the language, you first encounter an easy interface which lets you do a lot of easy stuff you might want to do. Then, when you are ready, you can "peel off another layer" and expose more complication, but also expose more power. (And if you "peel off" too much too quickly, it will make you cry!)
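One common way to get "onion layers" is optional parameters with sensible defaults. The sketch below is in Python, and the function `parse_table` with its parameters `sep`, `convert` and `skip_header` is invented for the illustration: a beginner can ignore everything but the first argument, and each extra layer is only exposed when asked for.

```python
def parse_table(text, sep=",", convert=str, skip_header=False):
    """Split text into rows of cells; the optional arguments are the inner layers."""
    rows = [line.split(sep) for line in text.strip().splitlines()]
    if skip_header:
        rows = rows[1:]
    return [[convert(cell.strip()) for cell in row] for row in rows]


# Layer 1: the easy interface, nothing to learn but the function name.
print(parse_table("1,2\n3,4"))

# Layer 2: peel once, choose the separator and convert cells to numbers.
print(parse_table("1;2\n3;4", sep=";", convert=int))

# Layer 3: peel again, skip a header row as well.
print(parse_table("x y\n1 2", sep=" ", convert=int, skip_header=True))
```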

4.3.3) Be forgiving. Learning requires attempts. If the honest student who really wants to learn is constantly punished for trying to learn, he'll probably stop learning. The programming language should make it hard to make mistakes, make mistakes easy to detect, minimize the negative consequences of mistakes, have an "undo", etc.

4.3.4) Encourage virtue, discourage vice. A good programming language should encourage good programming habits in new programmers (and rap the fingers of old misbehaved programmers with a wooden ruler! ;-) Since we are talking about programming languages, this usually means having a syntax that is easier to type and prettier to look at when you are "doing the right thing", and more painful and ugly as you "misbehave" more (perhaps even declaring an error and refusing to compile if you do something really nasty). Yes, this means the programming language can be too permissive, or too strict, or just plain misguided (only the truly virtuous can teach virtue). But it is inevitable: each programming language ends up influencing its users (just like teachers always end up influencing their students). So as far as possible, the influence should be good.

Be careful. This idea of "encouraging programming virtue" doesn't mean being horse-whipped by the curly-brace nazi for lining them up your way and not theirs. It means sucking fresh concentrated computer science milk from the nursing bottle of a motherly programming language: you become a better programmer with very little effort.

"Efficiency" can be defined as getting the same result with less effort. Because programming languages target both men and machines, they should be "efficient" for both men and machines:

- On the part of the computer, being "efficient" means doing the same thing with less memory and fewer CPU cycles.

- On the part of the programmer, being "efficient" means doing the same thing with fewer keystrokes on the keyboard.

First, a quick disclaimer about what we are not talking about here: advances in CPU design, better operating systems, compilers with better optimizations, etc. They are very important, but outside the topic of programming language design.

How can a programming language increase the efficiency of computers (i.e. use less "computer resources", like memory, CPU cycles, parallel processors, etc.)? How can we avoid programs that are like a herd of cows crossing a river of molasses in the Wintertime?

Resource allocation is a tough problem, and requires everybody's collaboration (in our case the programmer and his programming language must collaborate). The general rules are:

- don't use something if you don't need it;

- if you do need something, try to give advance warning;

- if several things could satisfy your need, pick the least expensive option;

- don't hang on to anything longer than necessary.

Here are some examples for each category:

5.1.1) Don't use something if you don't need it. For example, if large portions of code are going to be duplicated, the programming language should make that obvious to the programmer. (In C++, using templates incorrectly often leads to such "code bloat".) Another example is mandatory "automatic garbage collection" (i.e. all variables are allocated dynamically on what is called "the heap", which takes more memory and CPU cycles, all else being equal). Sometimes the programmer knows exactly who owns what chunk of memory, and when that memory is no longer used, so he should not have to pay for a feature he won't use.

5.1.2) If you do need something, try to give advance warning. For example, instead of just plopping a large amount of work on the compiler's lap with a Post-It saying "To be executed", the programmer could say: "Hello Mr. Compiler! I followed your suggestion and divided the work into two boxes. This box contains stuff that can only be done at runtime, but this other box contains stuff I'm pretty sure you could calculate right now, during compile time, so that would save CPU cycles during execution".

Another example is "branch prediction": a programmer might know that such a branch of an "if-then-else" or "switch" statement will be far more frequent. Since incorrect "CPU branch-prediction" has such negative consequences on execution speed (flushing of the instruction pipeline, rollback of speculatively executed instructions, etc.), efficiency can be increased if the language allows the programmer to pass this information on to the compiler (perhaps by adopting the convention that the "if" clause will be more frequently executed than the "else" clause, etc.).

5.1.3) If several things could satisfy your need, pick the least expensive option. First, if there are many options, the programming language should not keep this a secret! Also, the language should make obvious the "cost" (in memory or CPU cycles) of each option, and help the programmer choose the best given the circumstances. (Perhaps by having a syntax that gets more verbose and ugly, as the cost of the option goes up.)

5.1.4) Don't hang on to anything longer than necessary. For example, don't freeze up the GUI because you're busy doing some background calculation. As soon as you have the user's input, fork off that task on a different thread, and let the user continue to interact with the GUI. Another example is releasing memory as soon as you're finished with it.
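As one possible illustration of "give it back as soon as you're finished", here is a Python sketch using a context manager. The resource is simulated: `open_handles` and `borrowed` are hypothetical names standing in for any scarce resource (memory, a file, a lock). The language construct (`with`) guarantees the release happens at the end of the block, even if something goes wrong inside it.

```python
from contextlib import contextmanager

open_handles = set()  # stand-in for a pool of scarce resources (hypothetical)


@contextmanager
def borrowed(name):
    """Hold the resource only for the duration of the 'with' block."""
    open_handles.add(name)
    try:
        yield name
    finally:
        open_handles.discard(name)  # released even if the block raises


with borrowed("scratch-buffer") as handle:
    in_use_inside = handle in open_handles   # still held here

in_use_after = "scratch-buffer" in open_handles  # already given back

print(in_use_inside, in_use_after)
```

The programmer never writes an explicit "release" call, so there is no way to forget it or to put it too late.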

The number of keystrokes can be classified into three categories: zero, as few as possible, and way too many:

5.2.1) Don't force the programmer to write what the compiler can figure out by itself. A common example in many modern languages is the type of a variable which is deduced from the initial value. You don't need to specify "string" or "integer" or "fraction" if the initial value is "hello" or "78" or "0.34422". Also, making the language more expressive has the wonderful side-effect of making the compiler "magically" type many things for you.

5.2.2) The more frequent, the shorter. Short words are easy to pronounce, but there aren't many of them (e.g. if you only have two letters, you cannot make as many words as if you have a dozen letters). The solution normally used by natural languages is that more frequently used words are short, and words that are rarely needed are longer. So you have "me", "bread", "go", "water", but "anticonstitutionally", "subdermatoglyphic", and "pseudopseudohypoparathyroidism". In programming languages, for example, rarely used keywords can be longer, and very frequent keywords should probably be expressed with something even shorter than a keyword: an operator (which is a keyword, but normally expressed with one or two symbols instead of an English word). Another example is "smaller namespaces". If you have two hammers in the house, one in the shop in the basement (for nails), and another in the shed outside (a cheap hammer with a rickety handle) just for closing paint cans, you could distinguish them with really long names like "hammer_in_the_basement" and "hammer_in_the_shed". But if you're smart, you'll design your programming language so that just "hammer" gets you the right one, depending on whether you're in the shed or the basement.
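The two hammers can be sketched with ordinary local scopes in Python (the function names are made up for the example). Inside each function, the short name `hammer` is enough, and it refers to the right hammer for that place.

```python
def in_the_basement():
    hammer = "claw hammer"        # the good one, for nails
    return f"driving nails with the {hammer}"


def in_the_shed():
    hammer = "rickety hammer"     # the cheap one, for paint cans
    return f"closing a paint can with the {hammer}"


print(in_the_basement())
print(in_the_shed())
```

Without scopes, both variables would live in one big namespace and would need the long names `hammer_in_the_basement` and `hammer_in_the_shed` everywhere they are used.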

You could say this rule has several "corollaries". First, if it's possible to have a default value for something, then the most frequently required "word" should be that default. A counter-example of this is in C++ when you have to explicitly specify "public" inheritance, when you almost always want "public" inheritance anyway.

Another "corollary" is that, because of the way our hands are made, and the way our keyboards are laid out, some keys are easier to type than others, so the easier things to type should be the ones more frequently used.

5.2.3) Don't force the programmer to repeat what he has written already. This rule includes all language mechanisms which allow the programmer to only "write things once", or "eliminate redundancy". One of the first (and still excellent) examples of this is the humble subprogram, which can help eliminate duplicated code. Another example is "CSS style sheets" in web programming. Another example could be the "semi-colon": many older languages require a "line terminator", like a semi-colon ";". But the programmer always hits "Enter" ("carriage return" for old-fashioned typewriters) at the end of the line. The programmer has already expressed his intention to end the line. Why force him to add a semi-colon?

Since programming languages must be understood both by men and by computers, they should be clear to both men and machines. But in practice, a programming language is normally "clear" for the computer (the computer will "understand" each operation code and execute it). So we'll focus here on clarity for men.

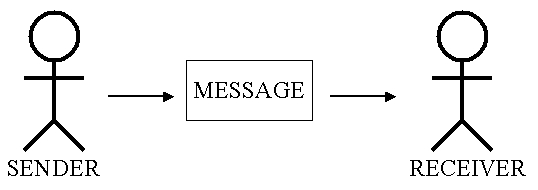

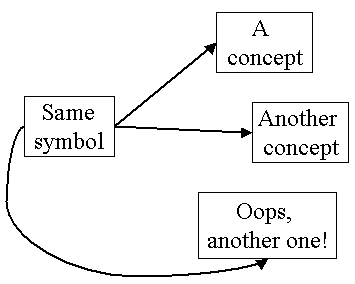

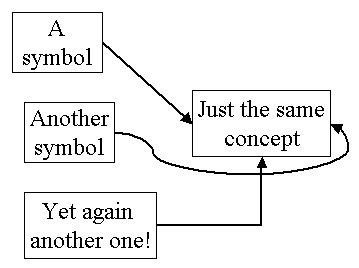

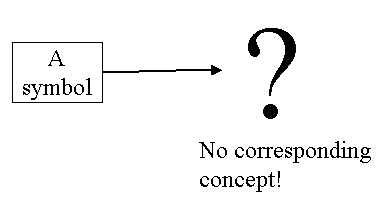

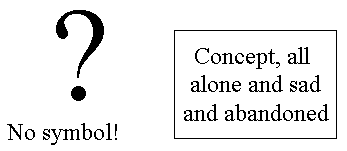

We know if clarity is missing, the programmer isn't sure what he's telling the computer to do, and other programmers who are looking over his shoulder can't figure that out either. But what is "clarity"? Programming languages are (among other things) associations between symbols and concepts, formed in us by habit (i.e. the repetition of acts, acts of seeing this symbol associated with that concept). The "strength" of these associations can be called "clarity", and "clarity shortcomings" can be organized around how such associations can be broken or made weaker.

6.1) One-to-one mapping between concepts and keywords. In a good programming language, each concept should have its own keyword (or operator, or other representation).

6.2) Different concepts should not share the same symbol. Counter-example: the "static" keyword in C++ which has several unrelated meanings.

6.3) What appears different should be different, what appears the same should be the same. In other words, maintain a good "Hamming distance" (between keywords, operators, function prototypes, etc.). Counter-example: in C++, a little "const" somewhere in a function prototype makes that function completely different.

6.4) The same concepts shouldn't have many different symbols. For example, in C++, you have "class" and "struct" which mean essentially the same thing.

6.5) The same things should always be in the same places. This particular rule is related to human psychology. If we strongly expect things to be in certain places, we will be confused, even if everything is there, but in a different place. You probably have felt this if you tried cooking a simple meal in somebody else's kitchen: you know their kitchen is well-equipped, so everything should be there, but you have no idea where the potato peeler is.

For programming languages, it's even more striking. Even when we finally find (the programming equivalent of) our potato peeler, it still seems unfamiliar. In programming, the meaning of things somehow gets tangled up with their location. You can observe this when a programmer tries to understand another programmer's source code: sometimes it feels like it's written in another language, even though it isn't, and code does what it's supposed to do, and doesn't have any bugs.

6.6) The meaning of a name should be stable. Counter-example: some programming languages provide mechanisms to make it very easy to rename things (like Eiffel, but C's "typedef" is even worse). It's quite frustrating to try to understand some source code, only to find out that what we thought was different is actually the same thing.
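The "typedef" complaint can be sketched in a few lines. The aliases `Meters` and `Seconds` and the functions below are hypothetical; the point is only that a typedef introduces a new name, not a new type.

```cpp
#include <cassert>
#include <type_traits>

typedef double Meters;
typedef double Seconds;

// What looks like two different types is one and the same type.
static_assert(std::is_same<Meters, Seconds>::value, "two names, one type");

Meters add_lengths(Meters a, Meters b) { return a + b; }

// Passing a duration where a length is expected compiles without complaint.
double mix_units() {
    Seconds elapsed = 4.0;
    Meters  length  = 3.0;
    return add_lengths(length, elapsed);
}

// Hypothetical demo: call it from main().
void demo_typedef() {
    assert(mix_units() == 7.0);
}
```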

6.7) Avoid "noise words". The expression "noise word" is related to "keyword". Some languages have keywords that carry no meaning of their own (or very little), i.e. they are a lot of "noise" and little "signal". For example, in languages like Pascal and Ada you have "then", "do", etc.

6.8) Avoid "orphan concepts". In a way, this category of "lack of clarity" is another way of saying: "Discover all there is to discover about programming languages", which of course I have no idea how to do. But in a weaker sense it can also mean: don't have concepts that can only be expressed with complex and subtle syntactic constructions only the parser clearly detects; try to "crystallize" them in something more tangible, like a keyword or operator.

Again in theory, because programming languages target both men and machines, they should be "complete" for both. In practice, machines are much simpler than men, so being "complete" in the sense of "being able to talk to all the hardware" is fairly easy, so we'll have just the first rule for that. The other "completeness" rules are more for the programmer himself:

7.1) Allow access to the computer hardware. This includes the CPU (threads and processes, general registers, special registers like the "PSW", etc.), the various kinds of memory (stack, heap, "text" i.e. the program file), mass storage (solid-state drives, "spinning rust" drives, optical disks, etc.), peripheral hardware (clock, monitor, keyboard, sound card, microphone, camera, GPS, fingerprint readers, etc.).

7.2) Allow access to all "programming paradigms". Structured programming, ADT (Abstract Data Type) programming, object-oriented programming, generic programming, CBSE (Component-Based Software Engineering), etc.

7.3) Allow access to the work of other programmers ("code reuse"). In a way, once something is well-programmed, it becomes part of the "programming vocabulary". (It is also one of the fastest and most efficient ways of programming, since you don't program! Hence the old programmer's tongue-in-cheek advice: "As far as possible, steal code!"). A programming language must give access to this new extension of the "programming vocabulary". And since the expression "work of other programmers" can have many meanings, access should ideally be allowed to programming work done:

- in various stages of completion (from simple "code snippets" to full application frameworks, and everything in between);

- in various stages of "runnability" (i.e. source code, interpreted bytecode, static and dynamic libraries, etc.);

- using programming tools from other vendors (compilers, linkers, profilers, IDEs, etc.);

- in other programming languages;

- for other operating systems;

- on other computer hardware;

- using other conventions (ASCII vs. Unicode, big-endian vs. little endian, etc.);

- etc.

7.4) Allow access to things that have not been invented yet (Forward compatibility). Because new "computer science things" are constantly being invented, a programming language that doesn't allow access to these new things will be less and less "complete", and will eventually "die" because no programmer will want to greatly limit his "vocabulary" by using this "old language". So ideally, a programming language should be designed to be "compatible with the future", to have "room for growth".

For a language, being "Widely-used" is a "virtuous circle":

- because more people use it, communication is facilitated between more people, so more people want to start to use this language;

- because more people use it, it becomes more profitable to develop products for that language (books, courses, tools, etc.), so more products are developed, which means more people want to use that language, because more products support it;

- because more people use it, and it's so well supported by products, employers want to hire more employees who know that language, so more people want to start to use this language;

- because more people use it, more users complain about the deficiencies of this language, and more smart people with good language design ideas study solutions for these deficiencies, so the language often improves more, which attracts more users to this language;

- etc.

In a way, "widely-used" is not a language design rule, but a consequence, a "reward" for respecting all other language design rules. So why include it in this list? Because "language design rules" can be divided into "internal" and "external", i.e. design rules that improve the "internal qualities" of a language, and those that are "external" to the language, even though they help increase the number of users (which, as we've just said, ends up improving the internal qualities of a language).

So what can be done, which will increase the number of users, but without actually modifying the language itself? Marketing. Marketing is a domain separate from programming language design, but marketing had to be mentioned, since it's so important for the success of a new language. Not much will be said here, except to recall some general marketing rules (which are certainly better explained elsewhere by marketing experts):

8.1) Make a good first impression. When a potential customer downloads your product and tries it for the first time, you'd better not make the experience painful and frustrating. Underpromise and overdeliver.

8.2) Provide good customer service. For a programming language, "good customer service" means for example good error messages from the compiler. (You can almost hear the programmer saying: "Hey, my programming language is broken! Don't make me wait in line! Fix your product now, or give me my money back!"). Another example: don't insult your customer. (A programming language should not tell programmers how to do their job, especially since most of them are probably more competent than the language designer anyway. Better to stick to humble suggestions, when you are really sure about what you're saying.)

8.3) Have good timing. The best product in the world will probably fail if it arrives on the market at the "wrong time" (for example, after a blockbuster product corners that market segment, or before potential customers realize they have a need that this new product can satisfy, or before the technology contained in this new product is sufficiently developed, etc.)

8.4) Choose your initial target audience carefully. Marketing experts use sociology, among other disciplines, to distinguish between various groups of people. For example, some groups are naturally more open to new programming languages, more avid to carefully read documentation, more capable of producing high-quality source code, etc. (These are sometimes called "your champions", the people you would prefer to have as "missionaries" of the "good news" of your new language.) Also, some groups are probably too small (like the group of very rich people with the latest and greatest super-powerful computers that can easily run a very complicated programming language, as opposed to the very large group of people with ordinary and boring computers that can only use a less-sexy and less-complicated language).

8.5) Remember many programmers are not native English speakers. Computers speak machine language, not English or French or Chinese. But for a programming language to become widely-used, it helps if it's more open to other natural languages (for example, translated tutorials and reference manuals, support for internationalization of the software produced with this programming language, etc.).

8.6) Build a good brand image. The name of your product should be easy to pronounce, memorable, different, etc. Have a simple and good-looking logo. Associate celebrities with your product. Sponsor relevant events that attract many potential customers. And so on.

This section doesn't really add new design rules. It just repeats many important ones, but from a different point of view. (I hope that point of view is more useful and objective than just my pet peeves!)

One of the reasons engineering is difficult is that there are usually several objectives which must be met, and the objectives are mutually exclusive. For example, an engineer might be told to design a car that is fast, safe, fuel-efficient and inexpensive. Well, the best way to go fast is to add a bigger engine, but that uses more fuel! And the best way to be safe is to go slowly! Not to mention price, since a bigger engine is more expensive, etc.

Designing a programming language is an engineering effort, so a good language will always have calculated compromises. For example, "Reducing keystrokes" eventually leads to a language that is so cryptic that it's hard to read (i.e. it's no longer clear). Or trying to make a new language easy to learn by making it very familiar might make it difficult to add never-before-seen features which nobody is familiar with (but which would have greatly increased the expressiveness of the language). Or a language optimized for speed of construction (i.e. a "scripting language", also known as "programming duct tape") will probably not be optimized for speed of execution or type safety. Even the kind of programming language is a compromise: general-purpose languages tend to be no better than second best for solving any problem (because the best would be a language specialized for that specific kind of problem). And so on.

A common engineering mistake is to drop one or more requirements. This allows spectacular improvements, apparently. For example, if the engineer designing the car drops affordability, he will easily come up with a super-fast car that sips fuel and protects the passengers even in horrible crashes. Only the car will be so expensive nobody will be able to buy it! For programming languages, pick any one of the language design rules above, drop it, and you'll probably be able to make spectacular gains, apparently.

So what is the right way of doing things? I suspect the answer is in Engineering books, where experts would explain things like: "Find all options. Find all pros and cons for each option. Quantify the critical attributes of the desired solution. Choose the best set of compromises." And also: "Learn from other people's mistakes. Avoid design-by-committee." Etc, etc.

Software is not like ordinary material reality, so you can easily do things in software that would be ridiculously difficult otherwise. In reality, you normally build a house out of pieces of lumber, nails and screws, coverings (like asphalt shingles for the roof), copper and plastic for the electrical wires and the plumbing, etc. Now imagine somebody who would build an entire house out of just nails: the walls would be nails neatly stacked up, the floors would be like big carpets of nails, the roof would be made of nails glued together in nice rows, like tiny little roofing tiles, etc. But you could also build a house entirely out of wood (with a wooden kitchen sink, wooden hinges for the doors, wooden pipes for the water, etc.) You could do the same, but using only windows: the walls would be just a vast array of windows, same with the roof, same with the floors (using really thick glass), etc.

In programming, many language designers decide to build programming languages out of a sub-set of available materials (perhaps because they understand some better than others, so are more comfortable with them). They will invent a language that has only functions and no variables, or no functions and only variables, or no keywords and only operators, etc. Then they will congratulate themselves for creating such a "simple" programming language (while avoiding topics like "the easy things their language cannot do and other languages can", or "the additional complexity that they have conveniently swept under the rug, while they showed their guests around their wonderfully simple living room", etc.).

That being said, Aristotle, Voltaire, Saint-Exupéry and others have said: "Perfection is when you cannot remove anything anymore". A simpler programming language will be better, all else being equal. (The hard part is of course "all else being equal", i.e. removing something without making the programming language worse.)

As mentioned above, communication between man and machine is the easy part. It's communicating between men which is hard. Coming up with a language that compiles and does what it's programmed to do is not a reason to celebrate. (Kreitzberg and Shneiderman famously said it another way: "Programming can be fun, so can cryptography; however they should not be combined.") My favorite example is this program which prints out the words to the song "99 bottles of beer", written in a programming language whose name should not be pronounced.

That being said, the very nature of a language involves a conventional (i.e. arbitrary) association between sign and signified. So what appears as stupendously weird and incomprehensible to someone can be a crystal-clear delight to another. The simple fact something appears insane is not sufficient to conclude a programming language is bad.

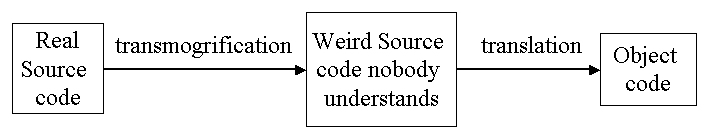

Simplistically, a programming language takes "source code" and transforms it into "machine code", which is then executed by a computer. (Actually, it's the compiler that "translates" the source code, and the result can also be an "object code" that cannot be directly executed by a computer, but let's keep it simplistic for now.) So the programmer figures out what he wants to say and types it up as source code in a programming language.

But some programming languages then take that source code and fiddle around with it, generating another source code, which only then is translated into the final result. In a way this is perverse, like a dog eating his own vomit. The programmer has already said what he wanted to say, using that programming language. If the source code needs to be rearranged into an entirely different source code, before it's translated to object code, then the programming language is deficient. Why wasn't the programmer allowed to just say directly what needed to be said? To add insult to injury, the compiler often complains about errors in the "transmogrified" source code, which the programmer has not written, and often doesn't understand!

That being said, the "preprocessor" or "macros" are a simple way of working around the limitations of a programming language. So historically, new features often start by being "macro hacks" before they are correctly integrated into a new programming language. I'm not saying the preprocessor is intrinsically evil; I'm saying a language designer should feel bad when people reach for the preprocessor. A bit like a restaurant chef who should feel bad if all his customers reached for the ketchup bottle in order to be able to swallow the food he cooked.
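A classic C/C++ illustration of source code being rewritten behind the programmer's back is the function-like macro. The sketch below is only one assumed example (the macro `SQUARE`, the counter `calls` and the functions are invented for it): the programmer writes what looks like a single call, but the compiler receives a different piece of source code.

```cpp
#include <cassert>

// A typical "macro hack" standing in for a missing language feature.
#define SQUARE(x) ((x) * (x))

int calls = 0;
int next_value() { ++calls; return 3; }

// What the programmer wrote: one call to next_value().
// What the compiler receives after preprocessing:
//     ((next_value()) * (next_value()))
int square_via_macro() {
    calls = 0;
    return SQUARE(next_value());
}

// Hypothetical demo: call it from main().
void demo_macro() {
    assert(square_via_macro() == 9);
    assert(calls == 2);   // the function ran twice, not once
}
```

The result happens to be correct here, but the function was called twice; with a function that has side effects or is expensive, the "transmogrified" code no longer says what the programmer meant.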

Me? Have an overly exalted opinion of my own programming language design abilities? Oops...

Some sources, in roughly decreasing order of importance (at least for me):

Bjarne Stroustrup, The Design and Evolution of C++, 1994.

Robert W. Sebesta, Concepts of Programming Languages, 2012.

Raphael Finkel, Advanced programming language design, 1996.

Charles Antony Richard Hoare, The emperor's old clothes, 1980.